What’s currently top of mind for me:

AI is advancing insanely fast. Faster than any of us ever expected.

In March 2025, Anthropic CEO Dario Amodei predicted that within three to six months AI would be writing 90% of all code.

Whether that number proves true or not, the trajectory is clear.

I don’t think enough of us in university are thinking critically about the implications of this. It will radically disrupt our lives in the next decade, regardless of what industry or profession we end up in.

I believe the goalposts for what is deemed “AGI” will keep moving each year as these models continue to shock everyone with the sophistication of their outputs.

But we only arrive at better outputs with better inputs. I often find myself questioning the ethics of how these incumbents acquire the data they use to advance their models at such a rapid pace.

So what does this mean for the future?

There is no longer a “moat” in design or development. What differentiates these products is the data they have access to and their ability to extract maximum value from it as an input, which in turn creates better outputs.

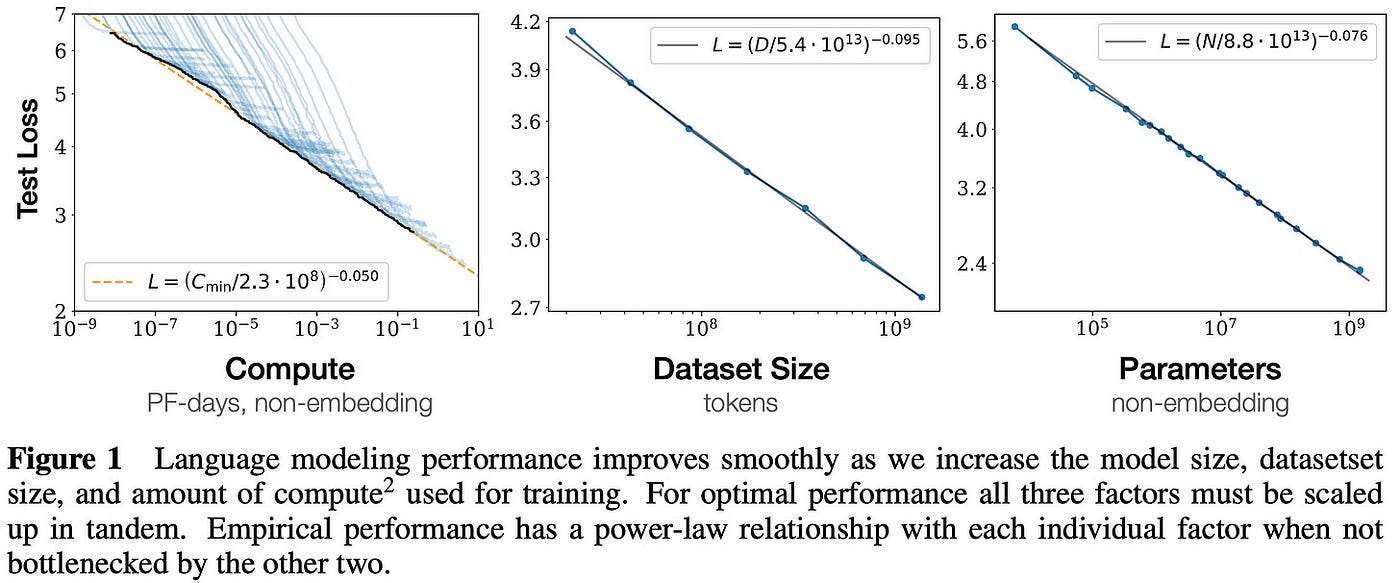

It’s only logical to assume that if these models are improving at an exponential rate, their data inputs are scaling exponentially with them.

Early GPT-3 models were trained on a large filtered crawl of the public web, along with book corpora and Wikipedia. So what new or untapped data is left to continue this exponential curve?

We’ve seen stagnation in the innovation of many American tech companies, with models from DeepSeek, Alibaba, Manus, and others outpacing the growth of Google, OpenAI, xAI, and their peers.

I think it’s inevitable that a startup will be born to govern the estimated 149+ zettabytes of data in existence today.

It’s currently impossible to detect whether an artist’s, designer’s, or animator’s work was used to train a model, unless they are a prominent figure with extensive high-quality public work, such as Hayao Miyazaki.

The decoupling from ethics has begun

The prime example of this came recently with OpenAI’s update to GPT-4o image generation. It’s safe to assume that the model was trained extensively on the life’s work of Hayao Miyazaki in order to accurately create “Studio Ghibli-style images.”

We can only speculate about whether Miyazaki agreed to this and was compensated, or whether his public works were simply scraped into a database.

I fear the line of what constitutes ethical data practices will continue to be blurred amidst this AI rush we are in.

I hope those of us who have the privilege to work on these tools remain cognizant of how these injustices may present themselves and actively work to mitigate them.

Having these conversations is critical. Smothering the injustices we commit under the banner of innovation will, in due time, cause society more harm than good.

I’m largely shaken up by these recent events surrounding GPT-4o’s new image generation. It signals to me a decoupling of technology from ethics. This should have people in these spaces seriously concerned.

A future where these corporations feel entitled to do as they please with data that is not theirs, so long as it’s creating value for users and shareholders, is a scary future.

This is not just an opinion

OpenAI reportedly added over 1M users in a single hour after Studio Ghibli-style images generated with GPT-4o started trending everywhere, reportedly the biggest spike since the launch of their paid plan. That surge came just before they raised $40B at a $300B valuation a week later.

That should tell you everything. Most people don’t care how the model was trained. They care about cool outputs that grow KPIs they can brag about.

I’m one of the most optimistic people about the future use cases of AI and what it will enable us to do. But we must ask ourselves: at what cost?

It seems those in power don’t care about the cost. They care about endlessly one-upping each other on the metrics of their new LLM iteration every quarter, all to convince VC and PE investors that they are getting a return like no other on their investment. That allows them to raise more money at an inflated valuation, and the cycle continues.

My ethical dilemma

In short, I’m conflicted about where I stand in all of this.

As an incoming UX Designer at Microsoft, I can’t help but think I’m enabling this malpractice. And having no power to push real change in this industry feels asphyxiating.

Nonetheless, it reignites a vigor in me. I’m eager to do all I can with the little power I have. I just don’t know where or how to start.

So documenting my thoughts, opinions, and perspective feels like the least I can do, in the hope that I’m not alone in this sentiment.

more from me soon :/